What Is Kubernetes? An Introduction to the Container Orchestration Tool

Kubernetes is an open-source platform for container orchestration, that is, for managing applications built out of multiple, largely self-contained runtimes called containers. The Docker containerization project launched in 2013, but distributed containerized applications can become difficult to coordinate. By making containerized applications dramatically easier to manage at scale, Kubernetes has become a key part of the container revolution.

Container orchestration

Containers support VM-like separation of concerns, but with far less overhead and far greater flexibility. As a result, containers have reshaped the way people think about:

- Developing.

- Deploying.

- Maintaining the software.

In a containerized architecture, the different services that constitute an application are packaged into separate containers and deployed across a cluster of physical or virtual machines. This gives rise to the need for container orchestration: a tool that automates the following:

- Management.

- Deployment.

- Networking.

- Scaling.

- Availability of the container-based apps.

What is Kubernetes?

Kubernetes is an open-source project that has become the most popular container orchestration tool. It lets you deploy and manage multi-container applications at scale. In practice, Kubernetes is most often used with Docker, the most popular containerization platform, but it can also work with any container system that conforms to the Open Container Initiative (OCI) standards for container image formats and runtimes. Because Kubernetes is open source, with relatively few restrictions on how it can be used, anyone who wants to run containers can use it freely, almost anywhere they want to run them: on-premises, in the public cloud, or both.

Google & Kubernetes:

Kubernetes began life as a project within Google. It is a successor to, though not a direct descendant of, Google Borg, an earlier container management tool that Google used internally. Google open sourced Kubernetes in 2014, in part because the distributed microservices architectures that Kubernetes facilitates make it easy to run applications in the cloud. Google sees the adoption of containers, microservices, and Kubernetes as potentially driving customers to its cloud services. Kubernetes is currently maintained by the Cloud Native Computing Foundation, which is itself under the umbrella of the Linux Foundation.

A side-by-side comparison of Kubernetes with Docker and Docker Swarm:

Kubernetes does not replace Docker; it augments it. However, Kubernetes does replace some of the higher-level technologies that have emerged around Docker.

One such technology is Docker Swarm, an orchestrator bundled with Docker. It is still possible to use Docker Swarm instead of Kubernetes, but Docker Inc. has chosen to make Kubernetes part of the Docker Community and Docker Enterprise editions going forward.

That does not make Kubernetes a drop-in replacement for Docker Swarm. Kubernetes is significantly more complex than Swarm and requires more work to deploy. That work is intended to provide a big payoff in the long run: a more manageable, resilient application infrastructure. For development work and smaller container clusters, Docker Swarm presents a simpler choice.

A side-by-side comparison of Kubernetes and Mesos:

One of the biggest competitors to Kubernetes is Mesos, an Apache project that began as a research project at UC Berkeley and was developed further at Twitter, where it was seen as an answer to Google's Borg project.

Mesos does offer container orchestration services, but its ambitions go far beyond that: it aims to be a sort of cloud operating system that can coordinate both kinds of components:

- Containerized components.

- Non-containerized components.

To that end, many different platforms can run within Mesos, including Kubernetes itself.

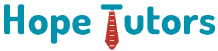

Kubernetes architecture: How Kubernetes works:

Kubernetes's architecture makes use of various concepts and abstractions. Some are variations on existing, familiar notions; others are specific to Kubernetes.

Kubernetes cluster:

The highest-level Kubernetes abstraction, the cluster, refers to the group of machines running Kubernetes and the containers managed by it. A Kubernetes cluster must have a master (now usually called the control plane), the system that commands and controls all the other Kubernetes machines in the cluster. A highly available Kubernetes cluster replicates the master's facilities across multiple machines, but only one master at a time runs the job scheduler and the controller manager.

Kubernetes nodes and pods:

Each cluster contains Kubernetes nodes, which may be physical machines or VMs. Again, the idea is abstraction: whatever the application is running on, Kubernetes handles deployment on that substrate. Kubernetes even makes it possible to ensure that certain containers run only on VMs or only on bare metal.

Nodes run pods, the most basic Kubernetes objects that can be created or managed. Each pod represents a single instance of an application or running process in Kubernetes, and consists of one or more containers. Kubernetes starts, stops, and replicates all the containers in a pod as a group. Pods keep the user's attention on the application rather than on the containers themselves. Details about how Kubernetes needs to be configured, from the state of pods on up, are kept in Etcd, a distributed key-value store.
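To make the pod idea concrete, here is a minimal sketch that builds a Pod manifest as a Python dictionary and serializes it to JSON (Kubernetes accepts JSON as well as YAML manifests). The field names follow the core `v1` Pod API; the pod name `web`, the container names, and the `example.com/...` image names are hypothetical placeholders. The two containers illustrate that one pod can hold more than one container, all managed as a unit.

```python
import json


def make_pod(name: str, app_image: str, sidecar_image: str) -> dict:
    """Build a minimal Pod manifest with two containers.

    Kubernetes schedules, starts, and stops both containers together,
    because they belong to the same pod.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                # Main application container (image name is hypothetical).
                {
                    "name": "app",
                    "image": app_image,
                    "ports": [{"containerPort": 8080}],
                },
                # Helper container that shares the pod's network and lifecycle.
                {"name": "log-shipper", "image": sidecar_image},
            ]
        },
    }


if __name__ == "__main__":
    pod = make_pod("web", "example.com/web:1.0", "example.com/log-shipper:1.0")
    # kubectl accepts JSON as well as YAML, e.g. `kubectl apply -f pod.json`.
    print(json.dumps(pod, indent=2))
```

In practice you would rarely create bare pods like this; controllers (described next) usually create them for you from a pod template.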

Pods are created and destroyed on nodes as needed to conform to the desired state that the user specifies in the pod definition. Kubernetes provides an abstraction called a controller for dealing with the logistics of how pods are:

- Spun up.

- Spun down.

- Rolled out.
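Controllers work as control loops: they repeatedly observe the actual state, compare it with the desired state, and act to close the gap. The sketch below is a toy, in-memory model of that idea, assuming a hypothetical `FakeCluster` class in place of a real cluster; it is not Kubernetes controller code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for the pods on a cluster."""

    pods: dict[str, str] = field(default_factory=dict)  # pod name -> app label
    _ids: count = field(default_factory=lambda: count(1))

    def create_pod(self, app: str) -> None:
        self.pods[f"{app}-{next(self._ids)}"] = app

    def delete_pod(self, name: str) -> None:
        del self.pods[name]


def reconcile(cluster: FakeCluster, app: str, desired_replicas: int) -> None:
    """One pass of a control loop: observe, compare with the desired state, act."""
    current = [name for name, label in cluster.pods.items() if label == app]
    diff = desired_replicas - len(current)
    if diff > 0:
        for _ in range(diff):
            cluster.create_pod(app)  # spin up missing pods
    elif diff < 0:
        for name in current[:-diff]:
            cluster.delete_pod(name)  # spin down surplus pods


if __name__ == "__main__":
    cluster = FakeCluster()
    reconcile(cluster, "web", 3)  # creates web-1, web-2, web-3
    cluster.delete_pod("web-2")  # simulate a pod dying
    reconcile(cluster, "web", 3)  # the loop notices and creates web-4
    print(sorted(cluster.pods))  # ['web-1', 'web-3', 'web-4']
```

The user never says "create web-4"; they only state "three replicas", and the loop works out the action. That declarative style is what lets Kubernetes recover from failures without manual intervention.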

Controllers come in a few different flavors, depending on the kind of application being managed. For instance, the StatefulSet controller is used to deal with applications that need persistent state. Another kind of controller, the Deployment, is used to scale an application up or down, update it to a new version, or roll it back to a known-good version if there is a problem.
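A Deployment bundles those responsibilities into one object: a replica count for scaling, a pod template whose changes trigger a rollout, and an update strategy. The following is a minimal sketch that builds such a manifest, using `apps/v1` field names; the name `web`, the image, and the surge/unavailable settings are illustrative assumptions.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest with a rolling-update strategy."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Scale up or down by changing this number.
            "replicas": replicas,
            # Which pods this Deployment owns; must match the template labels.
            "selector": {"matchLabels": {"app": name}},
            "strategy": {
                "type": "RollingUpdate",
                # Replace pods gradually: at most one extra, at most one missing.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 1},
            },
            # Pod template; changing e.g. the image starts a new rollout.
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_deployment("web", "example.com/web:1.0", 3), indent=2))
```

Once applied, `kubectl scale deployment/web --replicas=5` changes the replica count, and `kubectl rollout undo deployment/web` rolls back to the previous revision.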

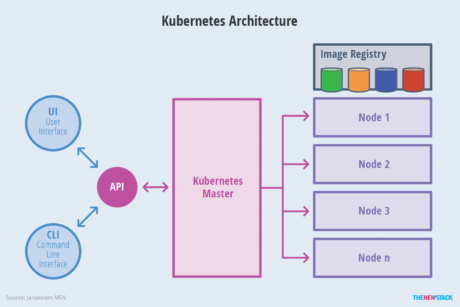

Kubernetes services:

Because pods live and die as needed, we need a different abstraction for dealing with the application lifecycle. An application is supposed to be a persistent entity, even when the pods running the containers that comprise it are not themselves persistent. To that end, Kubernetes provides an abstraction called a service.

A service in Kubernetes describes how a given group of pods can be accessed via the network. As the Kubernetes documentation puts it, the pods that constitute the back end of an application might change, but the front end should not have to know about that or track it. Services make this possible.
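A Service finds its pods through a label selector rather than through pod names, which is why individual pods can come and go. The sketch below builds a `v1` Service manifest and adds a simplified model of how the selector picks backing pods; the service and pod names are hypothetical, and the real endpoint logic also takes pod readiness into account, which this model ignores.

```python
import json


def make_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Service that forwards `port` to `target_port` on matching pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Any pod carrying this label becomes a backend of the service.
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def matching_pods(service: dict, pods: dict[str, dict[str, str]]) -> list[str]:
    """Simplified model: a pod backs the service if its labels contain
    every key/value pair in the selector (extra labels are allowed)."""
    selector = service["spec"]["selector"]
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    svc = make_service("web", "web", 80, 8080)
    pods = {
        "web-1": {"app": "web"},
        "web-7": {"app": "web", "tier": "frontend"},
        "db-1": {"app": "db"},
    }
    print(json.dumps(svc, indent=2))
    print(matching_pods(svc, pods))  # ['web-1', 'web-7']
```

If `web-1` dies and a replacement `web-8` appears with the same label, clients of the `web` service are unaffected; only the set of matching pods changes.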

A few more pieces internal to Kubernetes round out the picture. The scheduler parcels out workloads to nodes so that they are balanced across resources and so that deployments meet the requirements of the application definitions. The controller manager ensures that the state of the system (applications, workloads, and so on) matches the desired state defined in Etcd's configuration settings.
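The scheduler's basic idea, filtering out nodes that cannot fit a pod and then scoring the remaining ones, can be sketched as a toy model. This is not kube-scheduler's code: the `Node` class is hypothetical, only CPU (in millicores) and memory (in MiB) are considered, and the scoring rule "prefer the node left with the most free capacity" is one assumed policy among several possible ones.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores
    mem_capacity: int  # MiB
    cpu_used: int = 0
    mem_used: int = 0

    def fits(self, cpu: int, mem: int) -> bool:
        return (self.cpu_used + cpu <= self.cpu_capacity
                and self.mem_used + mem <= self.mem_capacity)

    def free_share_after(self, cpu: int, mem: int) -> float:
        """Average fraction of CPU and memory left free if the pod landed here."""
        cpu_free = (self.cpu_capacity - self.cpu_used - cpu) / self.cpu_capacity
        mem_free = (self.mem_capacity - self.mem_used - mem) / self.mem_capacity
        return (cpu_free + mem_free) / 2


def schedule(cpu: int, mem: int, nodes: list[Node]) -> Optional[str]:
    """Filter nodes that can fit the pod's requests, then score the survivors."""
    feasible = [n for n in nodes if n.fits(cpu, mem)]
    if not feasible:
        return None  # in Kubernetes the pod would stay Pending
    best = max(feasible, key=lambda n: n.free_share_after(cpu, mem))
    best.cpu_used += cpu  # reserve the requested resources on the chosen node
    best.mem_used += mem
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 4000, 8192), Node("node-b", 2000, 4096)]
    print(schedule(500, 512, nodes))   # node-a (more room left over)
    print(schedule(3000, 1024, nodes))  # node-a (node-b lacks the CPU)
    print(schedule(1000, 7000, nodes))  # None (no node has enough memory)
```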

It is important to keep in mind that Kubernetes does not replace any of the low-level mechanisms that containers use, such as Docker itself. Rather, Kubernetes provides a larger set of abstractions for using those mechanisms for the sake of keeping applications running at scale.

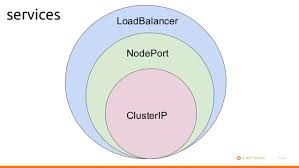

Kubernetes Ingress:

Kubernetes services are thought of as running within a cluster, but you will usually want to access them from the outside world. Kubernetes has several components that facilitate this, with varying degrees of simplicity and robustness, including NodePort and LoadBalancer. The component with the most flexibility, however, is Ingress. Ingress is an API that manages external access to a cluster's services, typically via HTTP.
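An Ingress maps hosts and URL paths to Services. The following sketch builds a `networking.k8s.io/v1` Ingress and adds a simplified model of `Prefix` path matching (whole path segments match, and the longest matching prefix wins). The host `shop.example.com` and the service names are hypothetical, and actual routing is done by whichever ingress controller is installed in the cluster, so details can differ from this model.

```python
from __future__ import annotations


def make_ingress(name: str, host: str, routes: dict[str, tuple[str, int]]) -> dict:
    """Build an Ingress routing URL path prefixes on one host to Services."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for path, (svc, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


def resolve(ingress: dict, host: str, request_path: str) -> str | None:
    """Simplified Prefix matching: match whole path segments, longest prefix wins."""
    best: tuple[str, str] | None = None
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") != host:
            continue
        for p in rule["http"]["paths"]:
            prefix = p["path"].rstrip("/")  # "/" becomes "", matching everything
            if request_path == prefix or request_path.startswith(prefix + "/"):
                if best is None or len(prefix) > len(best[0]):
                    best = (prefix, p["backend"]["service"]["name"])
    return best[1] if best else None


if __name__ == "__main__":
    ing = make_ingress("shop", "shop.example.com",
                       {"/": ("frontend", 80), "/api": ("api", 8080)})
    print(resolve(ing, "shop.example.com", "/api/orders"))  # api
    print(resolve(ing, "shop.example.com", "/apix"))  # frontend
```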

Ingress does require a bit of configuration to set up properly. Matthew Palmer, who wrote a book on Kubernetes development, steps you through the process on his website.

Kubernetes Dashboard:

One Kubernetes component that helps you keep on top of all the other components is Dashboard, a web-based UI with which you can deploy and troubleshoot applications and manage cluster resources. Dashboard is not installed by default.

Advantages of Kubernetes:

Because Kubernetes introduces new abstractions and concepts, and because its learning curve is steep, it is only normal to ask what the long-term payoffs of using Kubernetes are. Here is a rundown of some specific ways in which running applications inside Kubernetes becomes easier.

Kubernetes manages app health, replication, load balancing, and hardware resource allocation for you:

One of the most basic duties Kubernetes takes off your hands is the busywork of keeping an application up, running, and responsive to user demands. Applications that become "unhealthy", or that do not conform to the definition of health you describe for them, can be healed automatically.
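The "definition of health" is typically expressed as probes on a container. The sketch below builds a container spec with a liveness probe (repeated failures get the container restarted) and a readiness probe (the pod receives no Service traffic until it passes), plus a simplified model of the consecutive-failure rule. The `/healthz` and `/ready` endpoints are hypothetical: your application would have to serve them.

```python
from __future__ import annotations


def make_web_container(image: str) -> dict:
    """Container spec with liveness and readiness probes (paths are hypothetical)."""
    return {
        "name": "web",
        "image": image,
        "ports": [{"containerPort": 8080}],
        # Restart the container if /healthz fails 3 times in a row.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": 8080},
            "initialDelaySeconds": 5,
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
        # Withhold Service traffic until /ready succeeds.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": 8080},
            "periodSeconds": 5,
        },
    }


def liveness_failed(probe_results: list[bool], failure_threshold: int = 3) -> bool:
    """Simplified model: the container counts as dead once `failure_threshold`
    consecutive probes fail; any success resets the streak."""
    streak = 0
    for ok in probe_results:
        streak = 0 if ok else streak + 1
        if streak >= failure_threshold:
            return True
    return False


if __name__ == "__main__":
    print(liveness_failed([True, False, False, True, False]))  # False
    print(liveness_failed([True, False, False, False]))  # True
```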

Another benefit Kubernetes provides is making better use of hardware resources, including CPU, memory, storage I/O, and network bandwidth. Applications can have soft and hard limits set on their resource usage. Many applications that use minimal resources can be packed together on the same hardware, while applications that need to stretch out can be placed on systems where they have room to grow. Rolling out updates across a cluster, or rolling them back automatically if an update breaks something, is also possible.
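In a container spec, the soft and hard limits correspond to resource `requests` (what the scheduler reserves when packing pods onto nodes) and `limits` (the run-time ceiling: CPU is throttled, and a container exceeding its memory limit can be killed). The sketch below shows such a block and a partial quantity parser; it handles only `m` for CPU and the binary suffixes `Ki`, `Mi`, `Gi` for memory, whereas real Kubernetes quantities accept more formats. The values are illustrative.

```python
from __future__ import annotations

# Only binary memory suffixes are handled in this sketch; real Kubernetes
# quantities also accept decimal suffixes such as "M" and "G".
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_to_millicores(quantity: str) -> int:
    """'250m' -> 250, '0.5' -> 500, '2' -> 2000."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """'128Mi' -> 134217728; a bare number is taken as bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def requests_within_limits(resources: dict) -> bool:
    """Requests must not exceed limits, mirroring a check the API server performs."""
    req, lim = resources["requests"], resources["limits"]
    return (
        cpu_to_millicores(req["cpu"]) <= cpu_to_millicores(lim["cpu"])
        and memory_to_bytes(req["memory"]) <= memory_to_bytes(lim["memory"])
    )


# Container "resources" block: requests guide scheduling (bin packing),
# limits cap usage at run time. Values are illustrative.
container_resources = {
    "requests": {"cpu": "250m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}

if __name__ == "__main__":
    print(requests_within_limits(container_resources))  # True
```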

Kubernetes eases the deployment of preconfigured applications with Helm charts:

Package managers such as Debian Linux's APT and Python's Pip save users the trouble of manually installing and configuring an application. This is especially handy when an application has multiple external dependencies.

Helm is essentially a package manager for Kubernetes. Many popular software applications must run in Kubernetes as a group of interdependent containers. Helm provides a definition mechanism, a "chart", that describes how an application or service can be run as a group of containers inside Kubernetes.

You can create your own Helm charts from scratch, and you might have to if you are building a custom application for internal deployment. But if you are using a popular application with a common deployment pattern, there is a good chance someone has already composed a Helm chart for it and published it in the official Helm charts repository. Another place to look for official Helm charts is the Kubeapps.com directory.
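At its core, a chart is a set of manifest templates plus default values that users can override at install time. The sketch below illustrates only that idea: real Helm charts use Go templates (for example `{{ .Values.replicas }}`) and a `values.yaml` file, whereas here Python's `string.Template` and a dictionary stand in for them. The chart contents, the `render` function, and the `nginx:1.25` default image are illustrative assumptions, not Helm's API.

```python
from __future__ import annotations

from string import Template

# Hypothetical one-template "chart". Real Helm charts use Go templates;
# string.Template's $name placeholders stand in for them here.
DEPLOYMENT_TEMPLATE = Template(
    """\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $release-$name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
"""
)

# Defaults shipped with the chart, comparable in spirit to values.yaml.
DEFAULT_VALUES = {"name": "web", "image": "nginx:1.25", "replicas": 1}


def render(release: str, overrides: dict | None = None) -> str:
    """Merge user overrides over the chart defaults, then fill the template."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return DEPLOYMENT_TEMPLATE.substitute(values, release=release)


if __name__ == "__main__":
    # Roughly what `helm install demo ./chart --set replicas=3` conveys.
    print(render("demo", {"replicas": 3}))
```

The design point is the split: the chart author fixes the structure once, and each installation supplies only the handful of values that differ.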

Kubernetes simplifies management of storage, secrets, and other application-related resources:

Containers are meant to be immutable: whatever you put into them is not supposed to change. But applications need state, which means they need a reliable way to deal with external storage volumes. That is made all the more complicated by the way containers live, die, and are reborn across the lifetime of an application.

Kubernetes provides abstractions that allow containers and applications to deal with storage in a uniform way. Many common kinds of storage, from Amazon EBS volumes to plain old NFS shares, can be accessed through Kubernetes storage drivers called volumes. Normally, volumes are bound to a specific pod, but a volume subtype called a "Persistent Volume" can be used for data that needs to live on independently of any pod.
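Pods usually obtain persistent storage by referencing a PersistentVolumeClaim, which Kubernetes binds to a matching Persistent Volume. The sketch below builds a claim and a pod that mounts it, using core `v1` field names; the claim name `db-data`, the `10Gi` size, the image, and the mount path are hypothetical. Because the data lives in the claimed volume, a replacement pod that references the same claim sees the same data.

```python
import json


def make_pvc(name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim requesting `size` of storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mountable read-write by one node
            "resources": {"requests": {"storage": size}},
        },
    }


def make_pod_with_claim(pod_name: str, image: str, claim: str, mount_path: str) -> dict:
    """Build a Pod that mounts the claimed volume at `mount_path`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # The volume name here must match an entry under "volumes".
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


if __name__ == "__main__":
    pvc = make_pvc("db-data", "10Gi")
    pod = make_pod_with_claim("db", "example.com/db:1.0", "db-data", "/var/lib/data")
    print(json.dumps([pvc, pod], indent=2))
```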

Containers often need to work with "secrets": credentials such as API keys or service passwords that you do not want hard-coded into a container or stashed openly on a disk volume. While third-party solutions such as Docker secrets and HashiCorp Vault are available for this, Kubernetes has its own mechanism for handling secrets natively, although it does need to be configured with care. For instance, Etcd must be configured to use SSL/TLS when sending secrets between nodes, rather than sending them in plain text.
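The sketch below builds a `v1` Secret and a container environment variable that reads from it, and it also shows why care is needed: values in a Secret's `data` field are base64-encoded, which is an encoding, not encryption, so anyone who can read the object can recover them. The secret name `api-credentials`, the key `api-key`, and the variable `API_KEY` are hypothetical.

```python
from __future__ import annotations

import base64
import json


def make_secret(name: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret; each value is base64-encoded, not encrypted."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode()).decode("ascii") for k, v in values.items()},
    }


def env_from_secret(var: str, secret_name: str, key: str) -> dict:
    """Container env entry that injects one Secret key, instead of hard-coding it."""
    return {"name": var, "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}}}


if __name__ == "__main__":
    secret = make_secret("api-credentials", {"api-key": "not-a-real-key"})
    print(json.dumps(secret, indent=2))
    # Decoding is trivial, which is why access to Secrets must be restricted.
    print(base64.b64decode(secret["data"]["api-key"]).decode())
    print(env_from_secret("API_KEY", "api-credentials", "api-key"))
```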

Kubernetes applications can run in hybrid and multi-cloud environments:

One long-standing dream of cloud computing is to be able to run any application in any cloud, or in any mix of clouds, public or private. This is not just to avoid vendor lock-in, but also to take advantage of features specific to individual clouds.

Kubernetes provides a set of primitives, collectively known as federation, for keeping multiple clusters in sync with one another. For instance, a given application deployment can be kept consistent across multiple clusters, and different clusters can share service discovery so that a back-end resource can be accessed from any cluster. Federation can also be used to create highly available or fault-tolerant deployments, whether or not you are spanning multiple cloud environments.

Federation is still new to Kubernetes. Not all API resources are supported across federated instances yet, and upgrades do not yet have automatic testing infrastructure. But these shortcomings are slated to be addressed in future versions of Kubernetes.